How many flying hours, steaming days or tank miles does it take to kill a terrorist?

How many flying hours, steaming days or tank miles does it take to kill a terrorist? I sometimes ask this rhetorical question to people in the military to make a point. Training, while essential to preparing our forces for combat, is an intermediary step toward an end goal. The purpose of our military is not to fly aircraft, sail ships or drive around in tanks. The military exists to deter, fight and win the nation’s wars. So when we think about military readiness, we should be thinking about how well our forces are able to do their job.

A few months ago I published an article on this subject in Strategic Studies Quarterly entitled “Rethinking Readiness.” One of the main points I make in the article pertains to the importance of distinguishing between readiness inputs (resources) and outputs (performance). My article clearly touched a nerve with some in the military, as is evident in a two-page memo by a Marine Corps officer criticizing it. The author of the memo seems to agree that the military should be measuring outputs but misses the distinction between inputs and outputs, writing that the military already has “an objective readiness output measure (P/R/S/T-levels).” For those who aren’t familiar with this terminology, he is referencing how the military reports readiness on a unit-by-unit basis in four resource categories: P-level for personnel, R-level for equipment condition, S-level for equipment and supplies and T-level for training. But these are not outputs; these are the essential inputs to readiness. No matter how much fidelity the P/R/S/T-levels are reported with, they are still measures of the resources applied to readiness, not the actual readiness that results.

But don’t take my word for it; the Defense Department’s “Guide to the Chairman’s Readiness System” says the same thing. On pages 9-10 the manual states, “Commanders use resource data to report unit readiness,” (emphasis in the original text) and readiness levels reflect the “unit resources measured against the resources required.” It goes on to say that these readiness levels “by themselves, do not project a unit’s performance.” My point is we should try to measure the outputs (performance) more directly rather than using inputs (resources) as a proxy for readiness.

What are some examples of readiness outputs we could measure? In the article, I use a fighter squadron as an example—admittedly because it is easier to conceptualize performance measures for this type of unit. The table below presents a few specific examples for a fighter squadron. Keep in mind, this is only an example and is not intended to be an exhaustive list.

| Examples of Readiness Inputs | Examples of Readiness Outputs |
| --- | --- |
| Flying Hours (T-level) | Average bomb miss distance (low altitude) |
| Skilled Pilots (P-level) | Average bomb miss distance (high altitude) |
| Skilled Maintainers (P-level) | Air-to-air missile hit rate |
| Functioning Aircraft (R-level) | Sortie generation rate |
| Spare Parts (S-level) | Formation flying |
| Munitions (S-level) | Aerial refueling disconnect rate |

The inputs on the left certainly affect the outputs on the right. But is it a linear relationship? In other words, if I cut the flying hours in half does the air-to-air missile hit rate fall by half? Is there a time lag between cuts in training and drops in performance? Could more skilled maintainers substitute for a shortage of spare parts? We can’t begin to unravel these important questions unless we are measuring both the inputs and outputs.

Output metrics like those I am suggesting already exist within the Defense Department. When a fighter squadron goes through a Red Flag or Green Flag exercise, for example, it is tested in many of these areas. When pilots go through weapon systems school they are also evaluated on their performance. I am merely suggesting that such metrics be systematically collected, reported and used to assess readiness. In fairness, commanders already do this indirectly through self-assessments. The point I make in the article is that we need output metrics with “a greater level of fidelity” than these self-assessments and based on “objective measures whenever possible.” I am not questioning the integrity of commanders or their professional military judgment. I am simply saying that self-assessments are inherently subject to bias (conscious or unconscious) and can be applied differently from person to person and unit to unit. Moreover, the fidelity of these self-assessments in the current readiness reporting system (recorded as “yes,” “qualified yes,” and “no”) does not offer enough differentiation between levels of readiness to understand how a slight change in inputs affects the output.

While objective measures like average bomb miss distance are ideal, in many cases they may not be available. For example, the performance of a Marine Corps unit conducting an amphibious assault is not something that lends itself naturally to objective measures. Likewise, in the fighter squadron example above, formation flying skills cannot be assessed using purely objective measures. When that is the case, the military can use subjective measures based on objective standards, such as having an independent evaluator score units on a ten-point scale for various performance attributes.

Readiness At What Price?

Keep in mind that the whole purpose of measuring outputs is to know if we are adequately funding the right inputs to achieve the desired level of readiness. This gets to the heart of the other main critique, that my approach places “too great an emphasis on the ‘science’ and too little on the ‘art’ of readiness reporting.” I do not make such a distinction between art and science in the article because I think the two are inherently intertwined. Determining the right readiness output metrics (i.e., what you expect the military to be able to do) and how well it must perform in each area is fundamentally a matter of strategy, and strategy is certainly more art than science. Military judgment and experience are essential for choosing the appropriate outputs to measure. Understanding how to apply resources most effectively to achieve the desired output, however, is a matter of resource management and more science than art.

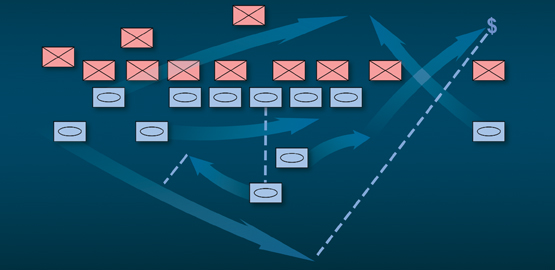

The approach I propose for understanding how inputs can be most effectively applied to achieve the desired outputs is one that has been widely applied to complex problems in science, business and the social sciences. It is an approach that is even touted within the military in other contexts, such as technology development and war gaming. It’s the idea of using experiments to test hypotheses. I am not the first to suggest this; analysts at the Congressional Budget Office recommended using experiments to better understand readiness in a 2011 report.

To be clear, the use of science, or controlled experiments, is not a substitute for the art of readiness. As I state in the article, “the accumulated wisdom resident in today’s military for what is needed to produce a ready force should not be dismissed or disregarded. Rather, it should be the starting point for developing a more robust and adaptive method for resourcing readiness.” Subjecting readiness theories and hypotheses to testing does not diminish the “art” of readiness—it supplements it with hard evidence of what works most effectively.

The criticism in the Marine Corps memo also includes some provocative language, such as “Mr. Harrison charges that the DOD incorrectly measures readiness and, in effect, haphazardly applies resources to achieve readiness.” Nowhere in the article do I “charge” that the military is measuring readiness “incorrectly” or “inaccurately,” nor do I say that resources are applied “haphazardly.” Such absolute statements miss the nuance of my argument. It’s not that current readiness measurements are incorrect or inaccurate; the point is that they are incomplete. And without good measures of readiness outputs, how can we be confident resources are being applied most effectively? I’m suggesting that the military could do better, which does not mean the military is doing a poor job now. In fact, I go out of my way to make this point: “The current balance of inputs…was crafted through years of war-fighting experience, and these inputs appear to work, as is evident by the high performance of U.S. forces in recent military operations.” That hardly suggests resources are being applied haphazardly.

One of the most common criticisms I have received is that my approach would cost money at a time when funding is already tight: “his solution would introduce a costly redesign of a [sic] the readiness reporting system and disadvantage units to test hypotheses.” It is true that I am calling for changes to the current readiness reporting system, including changes to the quarterly readiness reports to Congress. Given the enormous amount of work required for the current quarterly reports to Congress, streamlining these reports to focus only on key performance parameters could actually result in less work for Defense Department officials and a more useful product for members of Congress. And yes, testing hypotheses does mean that some units would receive fewer resources than others. But readiness resources are already being cut. I would simply use this opportunity to make cuts in a way that will generate useful data (e.g., randomly assigning units to test and control groups and tracking the results). This does not mean more units would have their resources cut; it would just change the way cuts are allocated.
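To make the idea of random assignment concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the unit identifiers, the function names and the notion of a single numeric output score per unit are my own illustrative assumptions, not part of any existing readiness reporting system. It shows only the mechanics of splitting units at random into a test group (which absorbs a given cut) and a control group, then comparing the average output score of the two groups afterward.

```python
import random
from statistics import mean


def assign_groups(unit_ids, test_fraction=0.5, seed=None):
    """Randomly split units into a test group and a control group.

    `seed` makes the assignment reproducible so it can be audited later.
    """
    rng = random.Random(seed)
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return {
        "test": sorted(shuffled[:n_test]),
        "control": sorted(shuffled[n_test:]),
    }


def mean_difference(scores, groups):
    """Mean output score of the test group minus that of the control group.

    `scores` maps each unit ID to one measured output (hypothetical here),
    such as an evaluator's ten-point rating.
    """
    test_mean = mean(scores[u] for u in groups["test"])
    control_mean = mean(scores[u] for u in groups["control"])
    return test_mean - control_mean


# Hypothetical unit identifiers, for illustration only.
units = [f"SQ-{i:02d}" for i in range(1, 9)]
groups = assign_groups(units, test_fraction=0.5, seed=2013)
```

Because chance decides which units land in which group, a persistent gap in `mean_difference` is more plausibly due to the change in resources than to pre-existing differences between units. A real experiment would also need enough units and a proper statistical test before drawing conclusions.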

Perhaps the biggest issue I have with the memo is the combative tone it takes from the beginning. One needs to read no further than the stationery heading to see what I mean: “A Strategic Scouting Report…informing you to better fight the Battle of the Beltway.” The memo appears to be written to help senior military leaders “fight” the ideas in my article (which implies that my ideas are the enemy). But it also highlights a deeper problem: the military’s fierce resistance to change. Throughout the article I use the story of the Oakland A’s and the “moneyball” revolution in baseball as an analogy for how the military could revolutionize its approach to readiness. Due to space constraints, I cut two paragraphs from the final version of the article published in Strategic Studies Quarterly, but given the circumstances I wish I had left them in:

In many ways, Michael Lewis’ story of the Oakland A’s and the “sabermetrics” revolution in baseball reads like an allegory for defense. “You didn’t have to look at big league baseball very closely,” he writes, “to see its fierce unwillingness to rethink anything.” He goes on to quote Bill James as saying, “The people who run baseball are surrounded by people trying to give them advice. So they’ve built very effective walls to keep out anything.” Lewis notes that baseball insiders “believed they could judge a player’s performance simply by watching it,” and that “conventional opinions about baseball players and baseball strategies had acquired the authority of fact.” In one of the most pivotal passages of the book, Lewis quotes at length from Robert “Vörös” McCracken, one of the baseball outsiders who turned conventional wisdom on its head. McCracken could just as well have been speaking about the military: “The problem with major league baseball is that it’s a self-populating institution. Knowledge is institutionalized. The people involved with baseball who aren’t players are ex-players. In their defense, their structure is not set up along corporate lines. They aren’t equipped to evaluate their own systems. They don’t have the mechanisms to let in the good and get rid of the bad.”

The military, like baseball, tends to be a self-populating institution that sets up barriers to resist outside influence. Many in the military think they can judge readiness by its inputs, much like baseball scouts thought they could judge players by their appearance. I am merely suggesting that we look at the stats. I don’t know how many flying hours it takes to kill a terrorist, but I have an idea for how we could figure that out. Unfortunately, it only takes one memo to kill a good idea.