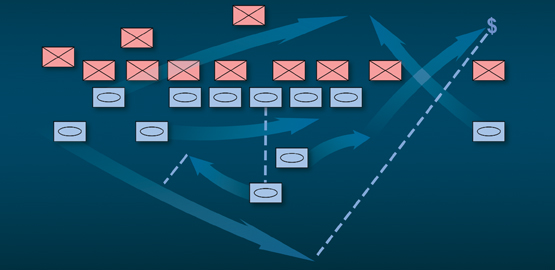

On average, it took 1,000 sorties of B-17 bombers dropping nearly two-and-a-half million pounds of “dumb” bombs to successfully knock out a significant Nazi target in 1944. By contrast, during the 2003 invasion of Iraq, a B-2 bomber could reliably achieve the same result with a single 2,000-pound “smart” bomb, and then go on to strike up to fifteen more targets in the same mission.

This level of accuracy proved to be an unprecedented and powerful force multiplier and was a central component of the so-called “second offset” strategy in the closing stages of the Cold War. By pushing the technological envelope, the U.S. and its allies could equalize the odds in any defense against the numerically superior Warsaw Pact in a hypothetical conflict. These same technologies were employed to deter aggression from smaller states by credibly holding the depth of their crucial command and logistics networks at risk, all while minimizing risks for civilians, a capability demonstrated in the Gulf War. The United States’ unique advantages in delivering precision strikes thus helped keep the peace in Europe and elsewhere while limiting the number of American boots on the ground, quickly becoming a bedrock of U.S. combat and deterrence operations the world over.

Twelve years later, the story is very different. According to a report released this summer by the Center for Strategic and Budgetary Assessments, the U.S. monopoly on precision-guided munitions (PGMs) is ending.